1. Quick Start Guide

This guide walks you through setting up and running your first Krypton ML server with a LangChain completion example.

1. Installing krypton-ml

First, install the krypton-ml package using pip:

pip install krypton-ml

Make sure you have Python 3.9 or later installed on your system.
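If you are unsure which interpreter pip is bound to, a quick check like the one below can confirm the version requirement before you install. This is only a convenience sketch; the helper name python_is_supported is made up for illustration and is not part of krypton-ml.

```python
import sys

# Minimum version stated in this guide.
MIN_PYTHON = (3, 9)


def python_is_supported(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if python_is_supported():
        print(f"Python {current} is supported.")
    else:
        print(f"Python {current} is too old; 3.9 or later is required.")
```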

2. Creating a LangChain Completion Example

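Create a new Python file at examples/langchain_example/completion.py, the location that the module_path and callable settings in the step 3 config point to, and add the following code: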

from langchain_ollama.llms import OllamaLLM
from langchain.prompts import PromptTemplate

# Initialize the Ollama LLM
llm = OllamaLLM(model="llama3.2:1b")

# Create a prompt template
prompt = PromptTemplate(
    input_variables=["topic"],
    template="Write a short paragraph about {topic}.",
)

# Compose the prompt and the LLM into a runnable chain
chain = prompt | llm

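This example builds a simple LangChain completion chain that pipes the prompt template into an Ollama LLM. It assumes an Ollama server is running locally with the llama3.2:1b model already pulled.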
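To make the pipe operator less mysterious, here is a toy, dependency-free sketch of the idea behind prompt | llm: the output of the left step becomes the input of the right step. The Step class and the toy_* names are hypothetical stand-ins written for this guide; this is not how LangChain itself is implemented.

```python
class Step:
    """A toy pipeline step; `a | b` feeds a's output into b."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # Compose left to right: run self first, then other.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical stand-ins for the prompt template and the model.
toy_prompt = Step(
    lambda inputs: "Write a short paragraph about {topic}.".format(**inputs)
)
toy_llm = Step(lambda text: f"[model output for: {text}]")

toy_chain = toy_prompt | toy_llm

if __name__ == "__main__":
    print(toy_chain.invoke({"topic": "artificial intelligence"}))
```

With the real chain from this step, the equivalent call would be chain.invoke({"topic": "artificial intelligence"}), which needs a reachable Ollama server.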

3. Creating the Config File

Create a YAML configuration file named krypton_config.yaml with the following content:

krypton:
  server:
    port: 5000
    debug: true
  models:
    - name: langchain-llama3.2-completion-example
      type: langchain
      description: llama3.2 completion example using langchain
      module_path: ./examples
      callable: langchain_example.completion.chain
      endpoint: models/langchain/llama3.2/completion
      tags:
        - langchain
        - example
        - completion

This configuration tells Krypton ML to:

- Run the server on all available network interfaces (0.0.0.0) on port 5000

- Load the chain object defined in completion.py

- Create an endpoint models/langchain/llama3.2/completion for this model
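The module_path and callable settings can be read as "add this directory to the import path, then import this dotted name". The sketch below shows one plausible way to resolve such a pair with the standard library. It is an assumption about the general technique, not Krypton ML's actual loader, and resolve_callable is a hypothetical helper name.

```python
import importlib
import sys


def resolve_callable(module_path, dotted_name):
    """Import `package.module.attr` after adding module_path to sys.path.

    Assumption: the last dotted component is an attribute and everything
    before it is the module to import.
    """
    module_name, _, attr_name = dotted_name.rpartition(".")
    if not module_name:
        raise ValueError(f"expected 'module.attribute', got {dotted_name!r}")
    if module_path not in sys.path:
        sys.path.insert(0, module_path)
    module = importlib.import_module(module_name)
    return getattr(module, attr_name)
```

Under that reading, resolve_callable("./examples", "langchain_example.completion.chain") would import langchain_example/completion.py from the examples directory and return its chain attribute.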

4. Running the Server with the Config File

Now you're ready to start the Krypton ML server. Run the following command:

krypton krypton_config.yaml

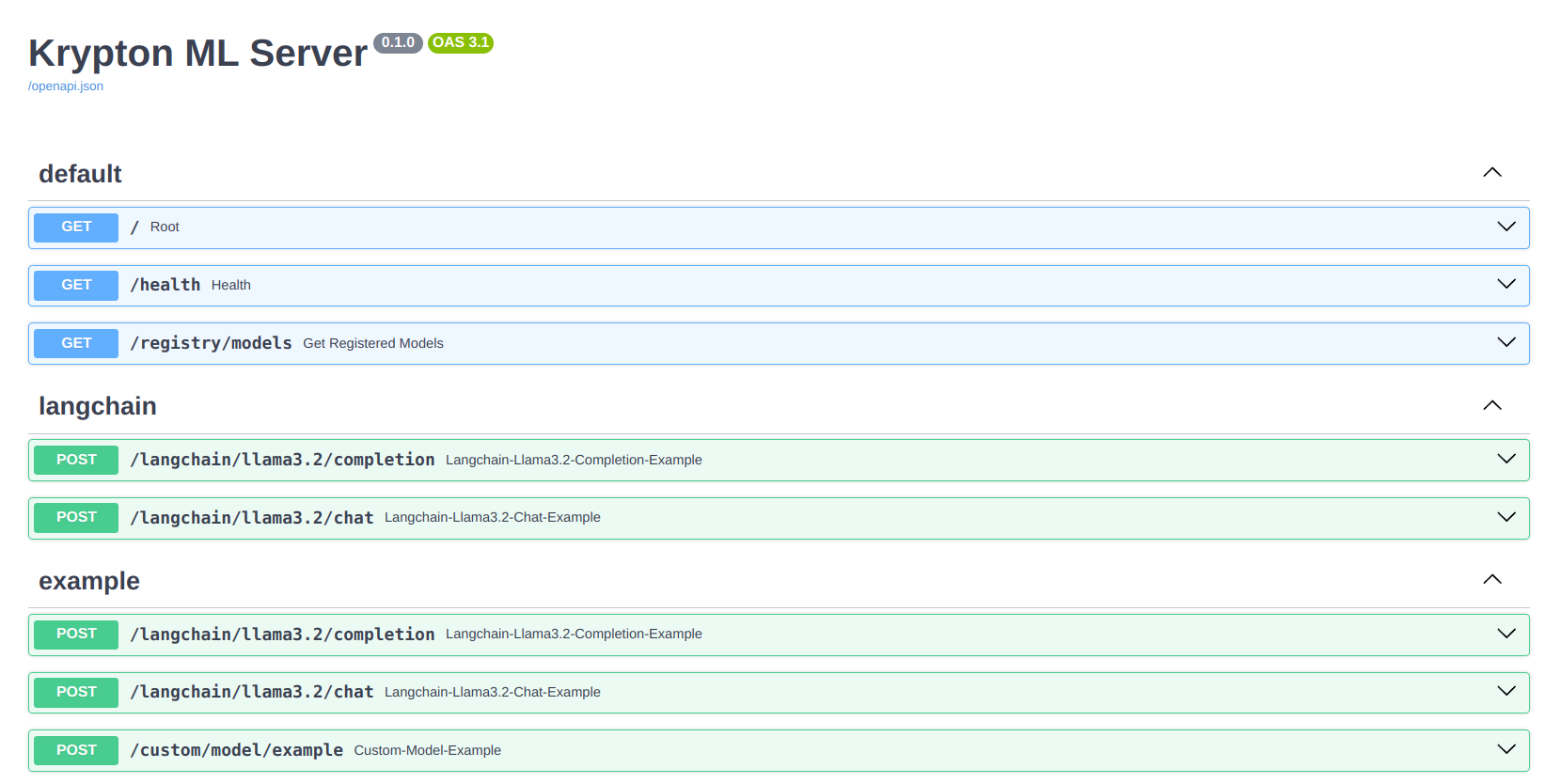

The server should start, and you'll see output indicating that it's running and which endpoints are available.

Testing Your Deployment

You can test your deployment using curl or any API client. Here's an example using curl:

curl -X POST http://localhost:5000/models/langchain/llama3.2/completion \
  -H "Content-Type: application/json" \
  -d '{"topic": "artificial intelligence"}'

This should return a short paragraph about artificial intelligence generated by your LangChain model.
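If you would rather test from Python, the same request can be sent with the standard library. This sketch mirrors the curl command above; the URL and payload come from this guide, while build_request is a hypothetical helper name. The response body is printed as-is because its exact format is not described here.

```python
import json
import urllib.error
import urllib.request

# Host, port, and path are taken from the config and curl example in this guide.
BASE_URL = "http://localhost:5000"
ENDPOINT = "models/langchain/llama3.2/completion"


def build_request(topic, base_url=BASE_URL, endpoint=ENDPOINT):
    """Build the POST request that mirrors the curl command."""
    body = json.dumps({"topic": topic}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{endpoint}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_request("artificial intelligence")
    try:
        # Requires the Krypton ML server from step 4 to be running.
        with urllib.request.urlopen(request, timeout=60) as response:
            print(response.read().decode("utf-8"))
    except urllib.error.URLError as exc:
        print(f"Could not reach the server: {exc}")
```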

Congratulations! You've successfully set up and run your first Krypton ML server with a LangChain completion example.